Published on: 2024-11-17

📝 Introduction

A growing wave of criticism claims that building apps is now effortless thanks to AI. That assumption often comes from people who haven’t tried to build anything beyond a few prompts. In reality, crafting a fully functional app, even with AI tools, still demands architectural thinking, coding proficiency, testing, and integration.

While AI tools aren’t perfect, dismissing them without firsthand experience is not just short-sighted — it misunderstands how modern development is evolving.

Prompt-gramming: Because Real Devs Test the Prompt.

🤔 What AI Actually Offers in App Development

Let’s be clear: AI won’t replace architects, engineers, or thoughtful planning. But it can offer:

- Boilerplate code generation (Next.js, Flask, Node, etc.)

- Rapid scaffolding of CRUD operations

- Real-time documentation lookup

- Instant syntax help

- Suggestions for integration patterns (APIs, DBs, auth, etc.)

The power isn’t in automating everything. It’s in accelerating the boring parts and unlocking new creative flows.
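To make "the boring parts" concrete, here is a minimal sketch of the kind of CRUD scaffold an assistant can draft in seconds. Python is an assumption (the post doesn’t commit to one stack), and `Note` and `NoteStore` are hypothetical names. Everything lives in memory; swapping in a real database, auth, and request handling is exactly the integration work that still falls to you.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, Iterator, Optional


@dataclass
class Note:
    id: int
    title: str
    body: str = ""


@dataclass
class NoteStore:
    """In-memory create/read/update/delete store (hypothetical example)."""
    _notes: Dict[int, Note] = field(default_factory=dict)
    _ids: Iterator[int] = field(default_factory=lambda: count(1))  # auto-increment ids

    def create(self, title: str, body: str = "") -> Note:
        note = Note(id=next(self._ids), title=title, body=body)
        self._notes[note.id] = note
        return note

    def read(self, note_id: int) -> Optional[Note]:
        return self._notes.get(note_id)  # None if the id is unknown

    def update(self, note_id: int, **changes: str) -> Optional[Note]:
        note = self._notes.get(note_id)
        if note is None:
            return None
        # Validate every field first so a bad key can't leave a half-applied update.
        unknown = set(changes) - {"title", "body"}
        if unknown:
            raise ValueError(f"cannot update fields: {sorted(unknown)}")
        for key, value in changes.items():
            setattr(note, key, value)
        return note

    def delete(self, note_id: int) -> bool:
        # True if something was removed, False if the id was unknown.
        return self._notes.pop(note_id, None) is not None


if __name__ == "__main__":
    store = NoteStore()
    note = store.create("Groceries", "milk, eggs")
    store.update(note.id, body="milk, eggs, coffee")
    print(store.read(note.id))
    print(store.delete(note.id), store.read(note.id))
```

Generating this is fast. Deciding whether ids should be integers or UUIDs, what happens on concurrent writes, and which fields users may edit is still a human call.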

🎮 The Myth of the Magic Prompt

There’s a popular fantasy that a single perfect prompt will generate a production-ready app. The truth is:

Prompts are starting points — not blueprints.

Even the best prompt needs:

- Context shaping (what the app does, who it’s for)

- Multiple iterations

- Testing and debugging

- Proper environment setup

- Tool orchestration (e.g., linking AI output to real APIs, databases, and frameworks)

Expecting ChatGPT or Copilot to deliver a full SaaS product from a one-liner is like asking Google Maps to build your house because it can show you how to get there.

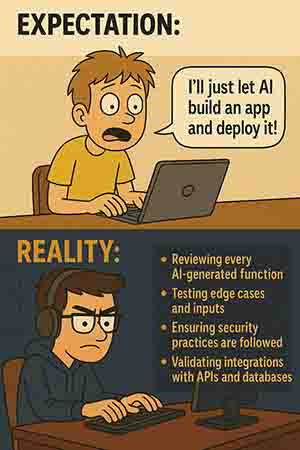

🧵 You Still Have to Test and Review Code

AI-generated code is never ready for deployment out of the box. Trusting raw output blindly is risky — and a clear sign of skipping responsibility.

AI won’t raise warnings if the logic is flawed. That’s still on the developer.

Building responsibly means:

- Reviewing every AI-generated function

- Testing edge cases and inputs

- Ensuring security practices are followed

- Validating integrations with APIs and databases

It takes real effort to transform a generated scaffold into a reliable, usable application.
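As a small illustration of why review and edge-case tests matter, consider a hypothetical pagination helper of the sort an assistant might produce. The draft reads fine at a glance, but it treats a 1-based `page` as if it were 0-based, so page 1 silently skips the first items. Nothing warns you; a test does. The function names and the 1-based convention are assumptions made for this sketch.

```python
def paginate_draft(items, page, page_size):
    # Plausible-looking draft: `page` is meant to be 1-based,
    # but the offset is computed as if it were 0-based.
    start = page * page_size
    return items[start:start + page_size]


def paginate(items, page, page_size):
    """Return the 1-based `page` of `items`, each page holding `page_size` items."""
    if page < 1:
        raise ValueError("page must be >= 1")
    if page_size < 1:
        raise ValueError("page_size must be >= 1")
    start = (page - 1) * page_size
    return items[start:start + page_size]


def test_paginate():
    items = list(range(10))
    assert paginate(items, 1, 3) == [0, 1, 2]  # first page starts at the first item
    assert paginate(items, 4, 3) == [9]         # last page may be partial
    assert paginate(items, 5, 3) == []          # past the end: empty, not an error
    assert paginate([], 1, 3) == []             # empty input
    for bad_page, bad_size in [(0, 3), (1, 0)]:
        try:
            paginate(items, bad_page, bad_size)
        except ValueError:
            pass
        else:
            raise AssertionError("expected ValueError for invalid input")
    # The same first-page check exposes the flaw in the draft.
    assert paginate_draft(items, 1, 3) != [0, 1, 2]


test_paginate()
print("all pagination checks passed")
```

The draft even returns a full, plausible-looking page of results; only the first-page assertion reveals that it starts in the wrong place.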

🧵 Why Dismissing Without Testing Is Worse

Critiquing AI dev tools without any hands-on use leads to outdated or lazy takes:

- “It hallucinates too much.” — Did you try fine-tuning or grounding with RAG?

- “It can’t scale.” — Did you deploy any AI-generated microservice?

- “It’s just autocomplete.” — Have you seen what LangChain or CrewAI workflows do?

There’s value in critique. But critiques without effort are just noise.

👩‍💼 What It Actually Takes to Build With AI

Anyone who’s shipped even a tiny AI-assisted project knows:

- You still plan architecture

- You still make tech stack decisions

- You still debug and test

- You still write glue code

What changes is how quickly you can get from idea to prototype.

📊 Visual: Where AI Helps in the App Stack

AI is a partner in the process — not a replacement for the process.

🔄 TL;DR

AI-assisted development isn’t magic. But dismissing it without trying it is worse.

Building real apps still takes thought, effort, and experience. Prompts won’t replace developers, but they are changing how fast and how far ideas can go.

You can’t just let AI generate code and deploy it blindly. Every output must still be tested, reviewed, and integrated carefully. That process takes effort, and it’s essential.

So before critiquing AI-assisted app development, try building an app with it. Even a small one. Then your feedback will carry weight, and maybe even insight.